Data Meets Dynamics: Workshop on Data Assimilation for Complex Systems and Applications coming to Wisconsin in August 2025

IFDS is excited to announce a two-day NSF-supported workshop hosted by the Institute for Foundations of Data Science (IFDS) at the University of Wisconsin-Madison. The workshop, titled “Data Meets Dynamics: Workshop on Data Assimilation for Complex Systems and Applications,” will take place on August 21–22, 2025 (Thursday–Friday).

This event is a collaboration between the IFDS at UW-Madison and the Data Science Center at Brigham Young University (BYU), a key partner of the UW IFDS. More details about the workshop can be found on our website:

The workshop will feature a range of activities, including oral presentations, poster sessions, and lightning talks, offering students and junior researchers an excellent opportunity to showcase their work. We encourage you to share this announcement with your department, your group members, or junior researchers who are interested in attending.

As the workshop is supported by the NSF, we are pleased to offer partial travel funding to selected participants. Additionally, there is no registration fee for this event. To apply for travel support and provide information about your participation, please complete the following form no later than March 1:

This workshop brings together researchers and practitioners to explore the broad landscape of data assimilation, emphasizing both theoretical foundations and practical applications. On the theoretical front, the workshop will delve into topics such as nudging data assimilation and its connections to partial differential equations (PDEs), control theory, and error analysis. For practical methods, we will highlight a range of Bayesian data assimilation techniques, including the ensemble Kalman filter and the particle filter, which represent discrete-in-time approaches. Continuous-in-time frameworks, such as nudging methods, conditional Gaussian nonlinear data assimilation, and the ensemble Kalman-Bucy filter, will also be discussed, with real-world applications in climate science, atmospheric and ocean modeling, and engineering systems. A key focus of the workshop is to strengthen interdisciplinary connections between data assimilation and tools such as machine learning, stochastic models, parameter estimation, optimal control, and model identification. By fostering discussions among different communities, the event aims to bridge gaps between theory and practice, encourage collaboration, and inspire new research directions. Additionally, the workshop will provide an excellent opportunity for young researchers to gain exposure to various methods, equipping them with tools to address challenges in complex dynamical systems.

Former IFDS Wisc RA Named Rising Star in Machine Learning

Ying Fan, a former IFDS Wisc RA advised by Kangwook Lee, has been selected as a 2024 Rising Star in Machine Learning. The award recognizes her outstanding achievements and potential in advancing the field of machine learning. She was invited to deliver a talk at the Rising Star in Machine Learning series, held on December 5 and 6 at the University of Maryland, College Park.

IFDS members involved in new AI institute

UChicago and UW-Madison are part of a new AI Institute for the Sky (SkAI) that has foundational components stemming from IFDS research.

PI Willett Receives SIAM Activity Group on Data Science Career Prize

IFDS PI Rebecca Willett was the recipient of the SIAM Activity Group on Data Science Career Prize at the 2024 SIAM Conference on Mathematics of Data Science (MDS24). Link: https://www.siam.org/publications/siam-news/articles/2024-october-prize-spotlight/

Andrew Lowy

Andrew Lowy (alowy@wisc.edu, https://sites.google.com/view/andrewlowy) joined the University of Wisconsin-Madison (UW-Madison) in September 2023 as an IFDS postdoc with Stephen J. Wright. Before joining IFDS at UW-Madison, he obtained his PhD in Applied Math at University of Southern California under the supervision of Meisam Razaviyayn, where he was awarded the 2023 Center for Applied Mathematical Sciences (CAMS) Graduate Student Prize for excellence in research with a substantial mathematical component.

Andrew’s research interests lie in trustworthy machine learning and optimization, with a focus on privacy, fairness, and robustness. His main area of expertise is differentially private optimization for machine learning. Andrew’s work characterises the fundamental statistical and computational limits of differentially private optimization problems that arise in modern machine learning. He also develops scalable optimization algorithms that attain these limits.

IFDS at University of Washington Welcomes New Postdoctoral Fellows for 2024-2025

At the University of Washington, several new IFDS postdoctoral fellows have recently joined us. We are excited to welcome them to IFDS!

Natalie Frank

Washington

Mo Zhou

Washington

Libin Zhu

Washington

Natalie Frank

Natalie Frank (natalief@uw.edu) https://natalie-frank.github.io/ joined the University of Washington as a Pearson and IFDS Fellow in September 2024 working with Bamdad Hosseini and Maryam Fazel. Prior to joining the University of Washington, she obtained her Ph.D. in Mathematics at NYU advised by Jonathan Niles-Weed.

Natalie researches the theory of adversarial learning using tools from analysis, probability, optimization, and PDEs. During her PhD, she focused on studying adversarial learning without model assumptions. Some topics she would like to explore at UW include leveraging convex optimization to enhance adversarial training, using these insights to perform distributionally robust learning for PDEs, and understanding how these topics interact with flatness of minimizers.

Mo Zhou

Mo Zhou (mozhou717@gmail.com) https://mozhou7.github.io is an IFDS postdoc (started October 2024) at the University of Washington working with Simon Du and Maryam Fazel. He received his Ph.D. in Computer Science from Duke University, advised by Rong Ge.

Mo’s research focuses on the mathematical foundations of machine learning, particularly optimization-related deep learning theory. His work involves analyzing the training dynamics of overparametrized models in the feature learning regime to uncover underlying mechanisms. He also investigates phenomena observed in deep learning practice by theoretically analyzing simplified models and making predictions based on these insights.

Libin Zhu

Libin Zhu (libinzhu@uw.edu) https://libinzhu.github.io/ joined the University of Washington in July 2024. He is an IFDS postdoc scholar working with Dmitriy Drusvyatskiy and Maryam Fazel. He received his PhD in Computer Science from UCSD, advised by Mikhail Belkin.

Libin’s research focuses on the optimization and mathematical foundations of deep learning. He has worked on the dynamics of neural networks in the kernel regime and the feature learning regime. He plans to investigate the mechanisms by which neural networks learn features and to develop machine learning models that can explicitly learn features.

Washington Adds 5 New RAs

At IFDS at the University of Washington, we are excited to introduce our cohort of 5 new research assistants in the fall 2024 quarter. Our RAs collaborate across disciplines on IFDS research. Each is advised by a primary and a secondary adviser, who are core members or affiliates of IFDS.

Weihang Xu

Washington

Qiwen Cui

Washington

Facheng Yu

Washington

Begoña García Malaxechebarría

Washington

Garrett Mulcahy

Washington

Weihang Xu

Weihang Xu (Computer Science and Engineering) works with Simon S. Du (Computer Science and Engineering) and Maryam Fazel (Electrical and Computer Engineering). He is interested in optimization theory and the physics of deep learning models. His current research focuses on theories of Neural Networks, clustering algorithms, and the attention mechanism.

Qiwen Cui

Qiwen Cui (Computer Science and Engineering) works with Simon S. Du (Computer Science and Engineering) and Maryam Fazel (Electrical and Computer Engineering). He is interested in reinforcement learning theory, in particular the multi-agent setting. He is also interested in the role of RLHF in the performance of LLMs.

Facheng Yu

Facheng Yu (Statistics) works with Zaid Harchaoui (Statistics) and Alex Luedtke (Statistics) on learning theory and semi-parametric models. His current research focuses on orthogonal statistical learning and stochastic optimization. He is also interested in high-dimensional statistics and non-asymptotic statistical inference.

Begoña García Malaxechebarría

Begoña García Malaxechebarría (Mathematics) works with Dmitriy Drusvyatskiy (Mathematics) and Maryam Fazel (Electrical and Computer Engineering). She is interested in the optimization and mathematical foundations of deep learning. Her current research focuses on the analysis and scaling limits of stochastic algorithms for large-scale problems in data science.

Garrett Mulcahy

Garrett Mulcahy (Mathematics) works with Soumik Pal (Mathematics) and Zaid Harchaoui (Statistics) on problems at the intersection of optimal transport and machine learning. He is currently working on small-time approximations of entropic regularized optimal transport plans (i.e., Schrödinger bridges). Additionally, he is interested in the statistical estimation of optimal transport-related quantities.

Tianxiao Shen

Tianxiao Shen is an IFDS postdoc scholar at the University of Washington, working with Yejin Choi and Zaid Harchaoui. Previously, she received her PhD from MIT, advised by Regina Barzilay and Tommi Jaakkola. Before that, she did her undergrad at Tsinghua University, where she was a member of the Yao Class.

Tianxiao has broad interests in natural language processing, machine learning, and deep learning. More specifically, she studies language models and develops algorithms to facilitate efficient, accurate, diverse, flexible, and controllable text generation.

Hanbaek Lyu paper featured in Nature Communications

Hanbaek Lyu, Assistant Professor of Mathematics, University of Wisconsin–Madison and IFDS faculty, was published in the January 3, 2023 issue of Nature Communications. His article, “Learning low-rank latent mesoscale structures in networks,” is summarized below.

Introduction:

We present a new approach to describe low-rank mesoscale structures in networks. We find that many real-world networks possess a small set of “latent motifs” that effectively approximate most subgraphs at a fixed mesoscale. Our work has applications in network comparison and network denoising.

Content:

Researchers in many fields use networks to encode interactions between entities in complex systems. To study the large-scale behavior of complex systems, it is useful to examine mesoscale structures in networks as building blocks that influence such behavior. In many studies of mesoscale structures, subgraph patterns (i.e., the connection patterns of small sets of nodes) have been studied as building blocks of network structure at various mesoscales. In particular, researchers often identify motifs as k-node subgraph patterns (where k is typically between 3 and 5) of a network. These patterns are unexpectedly more common than in a baseline network (which is constructed through a random process). In the last two decades, the study of motifs has yielded insights into networked systems in many areas, including biology, sociology, and economics. However, not much is known about how to use such motifs (or related mesoscale structures), after their discovery, as building blocks to reconstruct a network.

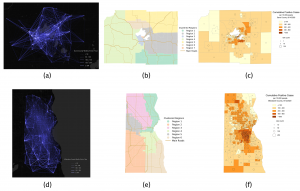

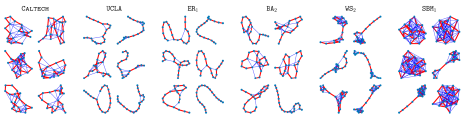

Networks have low-rank subgraph structures

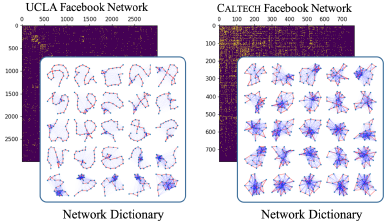

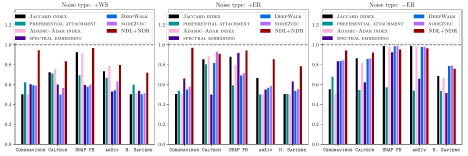

The figure above shows examples of 20-node subgraphs that include a path (in red) that spans the entire subgraph. These subgraphs are subsets of various real-world and synthetic networks. The depicted subgraphs have diverse connection patterns, which depend on the structure of the original network. One of our key findings is that, roughly speaking, the subgraphs have specific patterns. Specifically, we find that many real-world networks possess a small set of latent motifs that effectively approximate most subgraphs at a fixed mesoscale. The figure below shows “network dictionaries” of 25 latent motifs for Facebook “friendship” networks from UCLA and Caltech. By using subgraph sampling and nonnegative matrix factorization, we are able to discover these latent motifs.

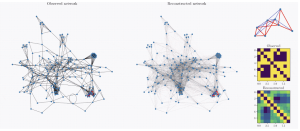

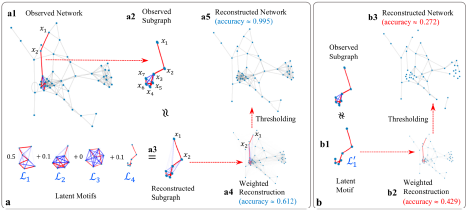
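The subgraph-sampling step described above can be illustrated with a minimal sketch. It assumes a self-avoiding random walk as the path sampler, which is a simplification and is not guaranteed to sample k-node paths uniformly; the helper names `sample_k_path` and `induced_adjacency` are hypothetical and not taken from the paper. Each sampled subgraph is flattened into a vector, and many such vectors stacked as columns would form the matrix that a nonnegative matrix factorization takes as input.

```python
import random

def sample_k_path(adj, k, rng, max_tries=1000):
    """Sample k distinct nodes that form a path, using a self-avoiding walk.

    Assumption: this simple sampler is for illustration only and is not
    guaranteed to be uniform over all k-node paths.
    """
    nodes = sorted(adj)
    for _ in range(max_tries):
        path = [rng.choice(nodes)]
        while len(path) < k:
            candidates = [v for v in sorted(adj[path[-1]]) if v not in path]
            if not candidates:
                break  # dead end: restart from a fresh node
            path.append(rng.choice(candidates))
        if len(path) == k:
            return path
    raise RuntimeError("no k-node path found")

def induced_adjacency(adj, path):
    """Return the k x k 0/1 adjacency matrix of the subgraph induced by the path's nodes."""
    return [[1 if v in adj[u] else 0 for v in path] for u in path]

# Toy network: a 6-cycle with one chord.
edges = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 5), (5, 0), (0, 3)]
adj = {}
for u, v in edges:
    adj.setdefault(u, set()).add(v)
    adj.setdefault(v, set()).add(u)

rng = random.Random(0)
path = sample_k_path(adj, 4, rng)
patch = induced_adjacency(adj, path)
column = [x for row in patch for x in row]  # one column of the matrix to be factorized
```

Because consecutive nodes of the sampled path are adjacent, every sampled subgraph is connected, which matches the idea of a path that spans the entire subgraph.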

The ability to encode and reconstruct networks using a small set of latent motifs has many applications in network analysis, including network comparison, network denoising, and edge inference. Such low-rank mesoscale structures allow one to reconstruct networks by approximating subgraphs of a network using combinations of latent motifs. In the animation below, we demonstrate our network-reconstruction algorithm using latent motifs. First, we repeatedly sample a k-node subgraph by sampling a path of k nodes. We then use the latent motifs to approximate the sampled subgraph. (See panels a1–a3 in the figure below.) We then replace the original subgraph with the approximated subgraph. By doing this over and over, we gradually form a weighted reconstructed network. The weights indicate how confident we are that the associated edges of the reconstructed network are also edges of the original observed network.
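The reconstruction loop described above can be sketched as follows. This is an illustration under stated assumptions rather than the authors' implementation: the nonnegative coefficients are computed with standard multiplicative updates while the motifs are held fixed, the weight of a node pair is taken to be the average of its approximated values over all sampled subgraphs that contain it, and the function names `nonneg_code` and `reconstruct` are hypothetical.

```python
def nonneg_code(x, motifs, iters=200, eps=1e-12):
    """Find nonnegative h so that sum_j h[j] * motifs[j] approximates x.

    Uses multiplicative updates with the motifs held fixed (an assumed solver).
    """
    wtx = [sum(m_i * x_i for m_i, x_i in zip(m, x)) for m in motifs]
    wtw = [[sum(a_i * b_i for a_i, b_i in zip(a, b)) for b in motifs] for a in motifs]
    h = [1.0] * len(motifs)
    for _ in range(iters):
        h = [h[j] * wtx[j] / (sum(wtw[j][l] * h[l] for l in range(len(h))) + eps)
             for j in range(len(h))]
    return h

def reconstruct(adj, paths, motifs):
    """Average the motif-based approximations of sampled subgraphs into edge weights."""
    total, count = {}, {}
    for path in paths:
        k = len(path)
        # Flattened adjacency matrix of the subgraph induced by the sampled path.
        x = [1.0 if path[b] in adj[path[a]] else 0.0 for a in range(k) for b in range(k)]
        h = nonneg_code(x, motifs)
        approx = [sum(h[j] * motifs[j][i] for j in range(len(motifs))) for i in range(k * k)]
        for a in range(k):
            for b in range(a + 1, k):
                pair = (min(path[a], path[b]), max(path[a], path[b]))
                total[pair] = total.get(pair, 0.0) + approx[a * k + b]
                count[pair] = count.get(pair, 0) + 1
    return {pair: total[pair] / count[pair] for pair in total}

# Toy example: the path graph 0-1-2-3 and one hypothetical latent motif (a 3-node path).
adj = {0: {1}, 1: {0, 2}, 2: {1, 3}, 3: {2}}
path_motif = [0.0, 1.0, 0.0,
              1.0, 0.0, 1.0,
              0.0, 1.0, 0.0]
paths = [[0, 1, 2], [1, 2, 3]]  # pre-sampled paths, fixed here for reproducibility
weights = reconstruct(adj, paths, [path_motif])
```

In this toy case the single motif matches every sampled subgraph, so true edges receive weights close to 1 and non-adjacent pairs receive weight 0. With a poorly chosen dictionary the approximations, and therefore the weights, would degrade.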

Selecting appropriate latent motifs is essential for our approach. These latent motifs act as building blocks of a network; they help us recreate different parts of it. If we don’t pick an appropriate set of latent motifs, we cannot expect to accurately capture the structure of a network. This is analogous to using the correct puzzle pieces to assemble a picture. If we use the wrong pieces, we will assemble a picture that doesn’t match the original picture.

Motivating Application: Anomalous-subgraph detection

A common problem in network analysis is the detection of anomalous subgraphs of a network. The connection pattern of an anomalous subgraph distinguishes it from the rest of a network. This anomalous-subgraph-detection problem has numerous high-impact applications, including in security, finance, and healthcare.

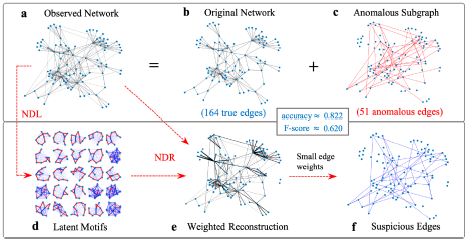

A simple conceptual framework for anomalous-subgraph detection is the following: Learn “normal subgraph patterns” in an observed network and then detect subgraphs in the observed network that deviate significantly from them. We can turn this high-level idea into a concrete algorithm by using latent motifs and network reconstruction, as the figure above illustrates. From an observed network (panel a), which consists of the original network (panel b) and an anomalous subgraph (panel c), we compute latent motifs (panel d) that can successfully approximate the k-node subgraphs of the observed network. A key observation is that these subgraphs should also describe the normal subgraph patterns of the observed network. Reconstructing the observed network using its latent motifs yields a weighted network (panel e) in which edges with positive and small weights deviate significantly from the normal subgraph patterns, which are captured by the latent motifs. Therefore, it is likely that these edges are anomalous. The suspicious edges (panel f) are the edges in the weighted reconstructed network that have positive weights that are less than a threshold. One can determine the threshold using a small set of known true edges and known anomalous edges. The suspicious edges match well with the anomalous edges (panel c).
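The thresholding step at the end of this pipeline can be sketched in a few lines. The sketch assumes that the weighted reconstructed network is given as a dictionary from edges to weights, and that the threshold is chosen to maximize classification accuracy on the small labeled set; the source only says that the threshold is determined from known true and anomalous edges, so that selection rule, the function names, and the toy weights are all hypothetical.

```python
def suspicious_edges(weights, threshold):
    """Edges whose reconstructed weight is positive but below the threshold."""
    return {e for e, w in weights.items() if 0 < w < threshold}

def choose_threshold(weights, known_true, known_anomalous):
    """Pick the threshold that best separates a small labeled set of edges.

    Assumption: accuracy on the labeled edges is the selection criterion.
    """
    labeled = [(weights[e], False) for e in known_true]
    labeled += [(weights[e], True) for e in known_anomalous]
    distinct = sorted({w for w, _ in labeled})
    candidates = distinct + [distinct[-1] + 1.0]  # the last candidate flags everything
    best_t, best_correct = None, -1
    for t in candidates:
        correct = sum((0 < w < t) == is_anomalous for w, is_anomalous in labeled)
        if correct > best_correct:
            best_t, best_correct = t, correct
    return best_t

# Hypothetical reconstructed weights: edges touching node 5 were planted as anomalies.
weights = {(0, 1): 0.9, (1, 2): 0.8, (2, 3): 0.85, (3, 4): 0.95,
           (0, 5): 0.1, (1, 5): 0.2, (2, 5): 0.15}
threshold = choose_threshold(weights,
                             known_true=[(0, 1), (1, 2)],
                             known_anomalous=[(0, 5), (1, 5)])
flagged = suspicious_edges(weights, threshold)
```

Here the unlabeled anomalous edge (2, 5) is flagged along with the two labeled ones, while the unlabeled true edges (2, 3) and (3, 4) are not.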

Our method can also be used for “link-prediction” problems, in which one seeks to figure out the most likely new edges given an observed network structure. The reasoning is similar to that for anomalous-subgraph detection. We learn latent motifs from an observed network and use them to reconstruct the network. The edges with the largest weights in the reconstructed network that were non-edges in the observed network are our predictions as the most likely edges. As we show in the figure below, our latent-motif approach is competitive with popular methods for anomalous-subgraph detection and link prediction.
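The link-prediction rule described above amounts to ranking the non-edges of the observed network by their reconstructed weights. A minimal sketch, assuming the reconstructed weights are available as a dictionary keyed by node pairs (the function name `predict_links` and the toy weights are hypothetical):

```python
def predict_links(weights, observed_edges, top=3):
    """Return the non-edges of the observed network with the largest reconstructed weights."""
    observed = {tuple(sorted(e)) for e in observed_edges}
    candidates = [(w, tuple(sorted(e))) for e, w in weights.items()
                  if tuple(sorted(e)) not in observed]
    # Sort by decreasing weight; break ties by node pair for reproducibility.
    candidates.sort(key=lambda item: (-item[0], item[1]))
    return [e for _, e in candidates[:top]]

# Hypothetical reconstructed weights for a 4-node network.
weights = {(0, 1): 0.9, (0, 2): 0.6, (1, 3): 0.4, (2, 3): 0.8, (0, 3): 0.05}
observed_edges = [(0, 1), (2, 3)]
predictions = predict_links(weights, observed_edges, top=2)
```

The same weighted network therefore serves both tasks: small positive weights on observed edges suggest anomalies, and large weights on unobserved pairs suggest likely new edges.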

Conclusion

We introduced a mesoscale network structure, which we call latent motifs, that consists of k-node subgraphs that are building blocks of the connected k-node subgraphs of a network. By using combinations of latent motifs, we can approximate k-node subgraphs that are induced by uniformly random k-paths of a network. We also established algorithmically and theoretically that one can accurately approximate a network if one has a dictionary of latent motifs that can accurately approximate mesoscale structures of the network.

Our computational experiments demonstrate that latent motifs can have distinctive network structures and that various social, collaboration, and protein–protein interaction networks have low-rank mesoscale structures, in the sense that a few learned latent motifs are able to reconstruct, infer, and denoise the edges of a network. We hypothesize that such low-rank mesoscale structures are a common feature of networks beyond the examined networks.

IFDS Postdoctoral Fellow Positions Available at the University of Washington

Deadline Extended!

The NSF Institute for Foundations of Data Science (IFDS) at the University of Washington (UW), Seattle, is seeking applications for one or more Postdoctoral Fellow positions. This is an ideal position for candidates interested in interdisciplinary research under the supervision of at least two faculty members of IFDS, which brings together researchers at the interface of mathematics, statistics, theoretical computer science, and electrical engineering. A unique benefit is the rich set of collaborative opportunities available. IFDS at UW operates in partnership with groups at the University of Wisconsin-Madison, the University of California at Santa Cruz, and the University of Chicago, and is supported by the NSF TRIPODS program. Initial appointment is for one year, with the possibility of renewal. Appropriate travel funds will be provided.

The ideal candidate will have a PhD in computer science, statistics, mathematics, engineering or a related field, with expertise in machine learning and data science. Desirable qualities include the ability to work effectively both independently and in a team, good communication skills, and a record of interdisciplinary collaborations.

In their cover letter, applicants should make sure to indicate potential faculty mentors (primary and secondary) at UW IFDS (see here for UW core faculty that can serve as main mentors, and here for broader affiliate members).

Full consideration will be given to applications received by January 30, 2024. The expected start date is in summer 2024, but earlier start dates will be considered. Selected candidates will be invited to short virtual (Zoom) interviews. For questions regarding the position, please contact the IFDS director Prof. Maryam Fazel at mfazel@uw.edu.

The full-time base salary range for this position is $5880 – $6580 per month, commensurate with experience and qualifications, or as mandated by a U.S. Department of Labor prevailing wage determination.

Equal Employment Opportunity Statement

The University of Washington is an affirmative action and equal opportunity employer. All qualified applicants will receive consideration for employment without regard to race, color, creed, religion, national origin, sex, sexual orientation, marital status, pregnancy, genetic information, gender identity or expression, age, disability, or protected veteran status.

Commitment to Diversity

The University of Washington is committed to building diversity among its faculty, librarian, staff, and student communities, and articulates that commitment in the UW Diversity Blueprint (http://www.washington.edu/diversity/diversity-blueprint/). Additionally, the University’s Faculty Code recognizes faculty efforts in research, teaching and/or service that address diversity and equal opportunity as important contributions to a faculty member’s academic profile and responsibilities (https://www.washington.edu/admin/rules/policies/FCG/FCCH24.html#2432).

Karan Srivastava

Karan Srivastava (Mathematics), advised by Jordan Ellenberg (Mathematics), is interested in applying machine learning techniques to generate interpretable, generalizable data for gaining a deeper understanding of problems in pure mathematics. Currently, he is working on generating examples in additive combinatorics and convex geometry using deep reinforcement learning.

Joe Shenouda

Joe Shenouda (Electrical and Computer Engineering), advised by Rob Nowak (ECE), Kangwook Lee (ECE) and Stephen Wright (Computer Sciences), is interested in developing a theoretical understanding of deep learning. Currently, he is working towards precisely characterising the benefits of depth in deep neural networks.

Alex Hayes

Alex Hayes (Statistics), advised by Keith Levin (Statistics) and Karl Rohe (Statistics), is interested in causal inference on networks. He is currently working on methods to estimate peer-to-peer contagion in noisily-observed networks.

Matthew Zurek

Matthew Zurek (Computer Sciences), advised by Yudong Chen (Computer Sciences) and Simon Du (Computer Science and Engineering), is interested in reinforcement learning theory. He is currently working on algorithms with improved instance-dependent sample complexities.

Jitian Zhao

Jitian Zhao (Statistics), advised by Karl Rohe (Statistics) and Fred Sala (Computer Sciences), is interested in graph analysis and non-Euclidean models. She is currently working on community detection and interpretation in the setting of large, directed, anonymous networks.

Thanasis Pittas

Thanasis Pittas (Computer Science), advised by Ilias Diakonikolas (Computer Science), is working on robust statistics. His aim is to design efficient algorithms that can tolerate a constant fraction of the data being corrupted. The significance of computational efficiency arises from the high-dimensional nature of datasets in modern applications. To complete our theoretical understanding, it is also imperative to study the inherent trade-offs between computational efficiency and statistical performance of these algorithms.

William Powell

William Powell (Mathematics), advised by Hanbaek Lyu (Mathematics) and Qiaomin Xie (Industrial and Systems Engineering), is interested in stochastic optimization. His current work focuses on a variance-reduced optimization algorithm in the context of dependent data sampling.

Wisconsin Funds 8 RAs for Fall 2023

The UW-Madison site of IFDS is funding several Research Assistants during Fall 2023 to collaborate across disciplines on IFDS research. Each one is advised by a primary and a secondary adviser, all of them members of IFDS.

Karan Srivastava

Wisconsin

Joe Shenouda

Wisconsin

Alex Hayes

Wisconsin

Matthew Zurek

Wisconsin

Jitian Zhao

Wisconsin

Thanasis Pittas

Wisconsin

William Powell

Wisconsin

Ziqian Lin

Wisconsin

Ziqian Lin

Ziqian Lin (Computer Science), advised by Kangwook Lee (Electrical and Computer Engineering) and Hanbaek Lyu (Mathematics), works on the intersection of machine learning and deep learning. Currently, he is working on model compositions of frozen pre-trained models and NLP topics including understanding the phenomena of in-context learning, and watermarking LLMs.

U Washington IFDS faculty Dmitriy Drusvyatskiy receives the SIAM Activity Group on Optimization Best Paper Prize

We are pleased to announce that Dmitriy Drusvyatskiy, Professor of Mathematics, is a co-recipient of the SIAM Activity Group on Optimization Best Paper Prize, together with Damek Davis, for the paper "Stochastic model-based minimization of weakly convex functions." This prize is awarded every three years to the author(s) of the most outstanding paper, as determined by the prize committee, on a topic in optimization published in the four calendar years preceding the award year. Congratulations Dima!

IFDS workshop brings together data science experts to explore ways of making algorithms that learn from data more robust and resilient

The workshop focused on exploring “distributional robustness.” This is a promising framework and research area in data science aimed at addressing complex shifts and changes in data, which are fielded by automated devices and processes such as the algorithms used in AI and machine learning.

IFDS Affiliates Publish New Works on Robustness and Optimization

Available online and free to download.

Optimization for Data Analysis

by Stephen Wright and Benjamin Recht

Published by Cambridge University Press

IFDS Welcomes Postdoctoral Affiliates for 2022-23

The ranks of IFDS Postdocs have grown remarkably in 2022-23 – an unprecedented 11 postdoctoral researchers are now affiliated with IFDS. Some have already been with us for a year, some have just joined. One (Lijun Ding) has moved from IFDS at Washington to IFDS at Wisconsin.

We take this opportunity to introduce the current cohort of postdocs and share a little information about each.

Andrew Lowy

Wisconsin

Natalie Frank

Washington

Mo Zhou

Washington

Libin Zhu

Washington

Tianxiao Shen

Washington

Jeongyeol Kwon

Wisconsin

Sushrut Karmalkar

Wisconsin

Ruhui Jin

Wisconsin

David Clancy

Wisconsin

Jake Soloff

Chicago

Jasper Lee

Wisconsin

Greg Canal

Wisconsin

Ahmet Alacaoglu

Wisconsin

Jeongyeol Kwon

Jeongyeol Kwon (jeongyeol.kwon@wisc.edu) joined UW-Madison in September 2022. He is doing a postdoc with Robert Nowak. He completed his PhD at The University of Texas at Austin in August 2022, advised by Constantine Caramanis.

Jeongyeol is broadly interested in theoretical aspects of machine learning and optimization. During his Ph.D., Jeongyeol focused on fundamental questions arising from statistical inference and sequential decision making in the presence of latent variables. In his earlier PhD years, he worked on the analysis of the Expectation-Maximization algorithm and showed its convergence and statistical optimality properties. More recently, he has been more involved in reinforcement learning theory with partial observations inspired by real-world examples. He plans to broaden his scope to more diverse research topics, including stochastic optimization and more practical approaches for RL and other related problems.

Sushrut Karmalkar

Sushrut Karmalkar joined UW-Madison as a postdoc in September 2021, mentored by Ilias Diakonikolas in the Department of Computer Sciences and supported by the 2021 CI Fellowship. He completed his Ph.D. at The University of Texas at Austin, advised by Prof. Adam Klivans.

During his Ph.D., Sushrut worked on various aspects of the theory of machine learning, including algorithmic robust statistics, theoretical guarantees for neural networks, and solving inverse problems via generative models.

During the first year of his postdoc, he focused on understanding the problem of sparse mean estimation in the presence of various, extremely aggressive noise models. In this coming year, he plans to work on getting stronger lower bounds for these problems as well as work on getting improved guarantees for slightly weaker noise models.

Sushrut is on the job market this year (2022-2023)!

Ruhui Jin

Ruhui Jin (rjin@math.wisc.edu) joined UW-Madison in August 2022. She is a Van Vleck postdoc in the Department of Mathematics, hosted by Qin Li. She is partly supported by IFDS. She completed her PhD in mathematics from UT-Austin in 2022, advised by Rachel Ward.

Her research is in mathematics of data science. Specifically, her thesis focused on dimensionality reduction for tensor-structured data. Currently, she is broadly interested in data-driven methods for learning complex systems.

David Clancy

David Clancy (dclancy@math.wisc.edu) joined UW-Madison in August of 2022. He is doing a postdoc with Hanbaek Lyu and Sebastien Roch in Mathematics and is partly supported by IFDS. He completed his PhD in mathematics at the University of Washington in Seattle working under the supervision of Soumik Pal. During graduate school he worked on problems related to the metric-measure space structure of sparse random graphs with small surplus as their size grows large. He plans to investigate a wider range of topics related to the component structure of random graphs with an underlying community structure as well as inference problems on trees and graphs.

Jake Soloff

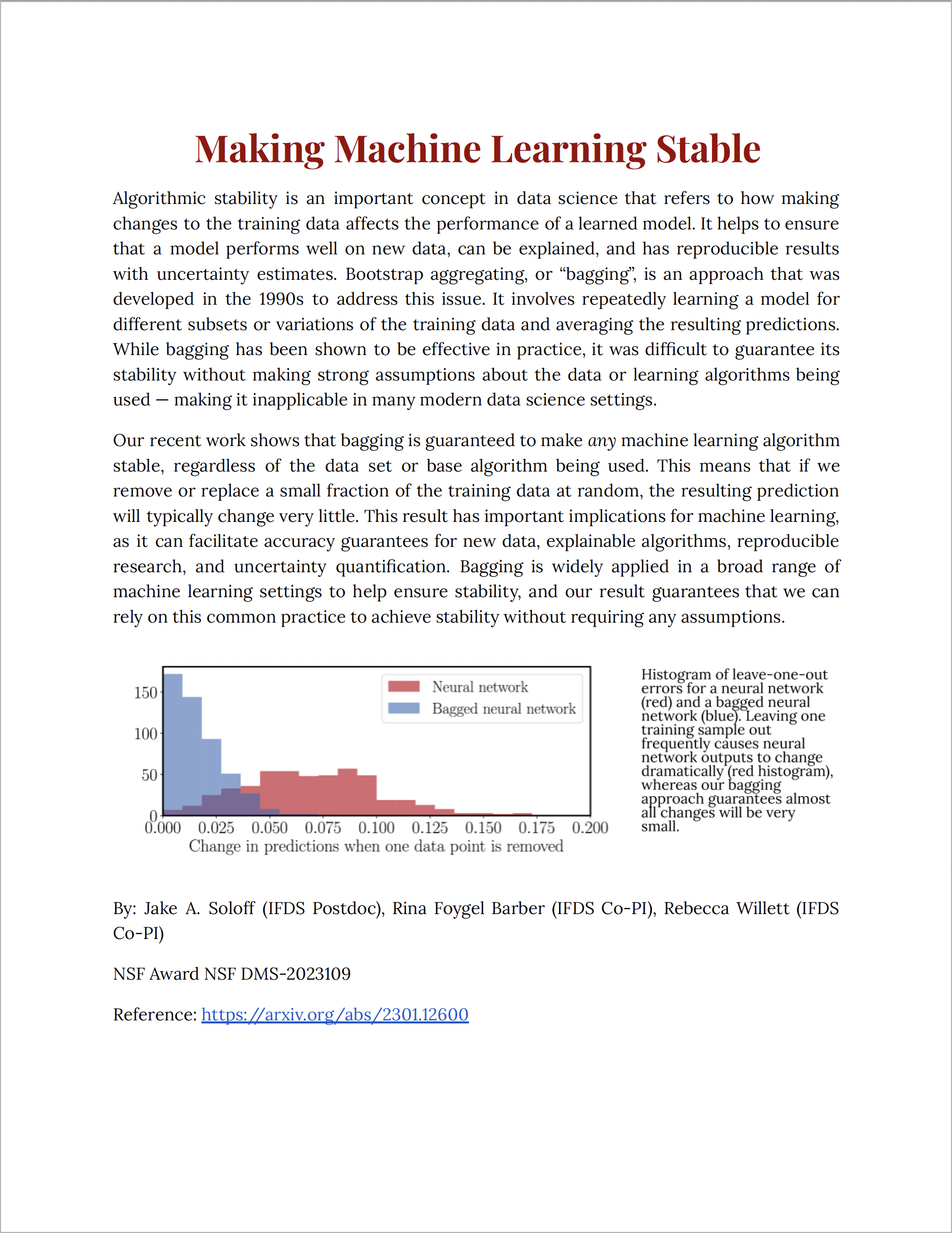

Jake is a postdoctoral researcher working with Rina Foygel Barber and Rebecca Willett in the Department of Statistics at the University of Chicago. Previously, he obtained his PhD from the Department of Statistics at UC Berkeley, co-advised by Aditya Guntuboyina and Michael I. Jordan. Jake received his ScB in mathematics from Brown University in 2016.

Ross Boczar

Ross Boczar (www.rossboczar.com, rjboczar@uw.edu) is a Postdoctoral Scholar at the University of Washington, associated with the Department of Electrical and Computer Engineering, the Institute for Foundations of Data Science, and the eScience Institute. He is advised by Prof. Maryam Fazel and Prof. Lillian J. Ratliff. His research interests include control theory, statistical learning theory, optimization, and other areas of applied mathematics. Recently, he has been exploring adversarial learning scenarios where a group of colluding users can learn and then exploit a firm’s deployed ML classifier.

Stephen Mussmann

Stephen Mussmann (somussmann@gmail.com) is an IFDS postdoc (started September 2021) at the University of Washington working with Ludwig Schmidt and Kevin Jamieson. Steve received his Ph.D. in Computer Science from Stanford University, advised by Percy Liang.

Steve researches data selection for machine learning, including subareas such as active learning, adaptive data collection, and data subset selection. During his Ph.D., Steve primarily focused on active learning: how to effectively select maximally informative data to collect or annotate. During his postdoc, Steve has worked on data pruning: choosing a small subset of a dataset such that models trained on that subset enjoy the same performance as models trained on the full dataset.

Steve is applying for academic research jobs this year (2022-2023)!

IFDS Workshop on Distributional Robustness in Data Science

August 4-6, 2022

University of Washington, Seattle, WA

A number of domain applications of data science, machine learning, mathematical optimization, and control have underscored the importance of the assumptions on the data generating mechanisms, changes, and biases. Distributional robustness has emerged as one promising framework to address some of these challenges. This topical workshop under the auspices of the Institute for Foundations of Data Science, an NSF TRIPODS institute, will survey the mathematical, statistical, and algorithmic foundations as well as recent advances at the frontiers of this research area. The workshop features invited talks as well as shorter talks by junior researchers and a social event to foster further discussions.

Central themes of the workshop include:

- Risk measures and distributional robustness for decision making

- Distributional shifts in real-world domain applications

- Optimization algorithms for distributionally robust machine learning

- Distributionally robust imitation learning and reinforcement learning

- Learning theoretic statistical guarantees for distributional robustness

Ben Teo

Research interests in Statistical Phylogenetics. Working with Prof. Cecile Ane.

Thanasis Pittas

A PhD student in the Computer Sciences Department at the University of Wisconsin–Madison. Thanasis is advised by Prof. Ilias Diakonikolas. He works on theoretical machine learning and robust statistics.

Shubham Kumar Bharti

Shubham Kumar Bharti (Computer Science), advised by Jerry Zhu (Computer Science), and Kangwook Lee (Electrical and Computer Engineering), is interested in Reinforcement Learning, Fairness and Machine Teaching. His recent work focuses on fairness problems in sequential decision making. Currently, he is working on defenses against trojan attacks in Reinforcement Learning.

Shuyao Li

Shuyao Li (Computer Science), advised by Stephen Wright (Computer Science), Jelena Diakonikolas (Computer Science), and Ilias Diakonikolas (Computer Science), works on optimization and machine learning. Currently, he focuses on second-order guarantees of stochastic optimization algorithms in robust learning settings.

Jiaxin Hu

Jiaxin Hu (Statistics), advised by Miaoyan Wang (Statistics) and Jerry Zhu (Computer Science), works on statistical machine learning. Currently, she focuses on tensor/matrix data modeling and analysis with applications in neuroscience and social networks.

Max Hill

Max Hill (Mathematics), advised by Sebastien Roch (Mathematics) and Cecile Ane (Statistics), works in probability and mathematical phylogenetics. His recent work focuses on the impact of recombination in phylogenetic tree estimation.

Sijia Fang

Sijia Fang (Statistics), advised by Karl Rohe (Statistics) and Sebastien Roch (Mathematics), works on social network and spectral analysis. More specifically, she is interested in hierarchical structures in social networks. She is also interested in phylogenetic tree and network recovery problems.

Zhiyan Ding

Zhiyan Ding (Mathematics), advised by Qin Li (Mathematics), works on applied and computational mathematics. More specifically, he uses PDE analysis tools to analyze machine learning algorithms, such as Bayesian sampling and over-parameterized neural networks. These PDE tools, including gradient flow equations and mean-field analysis, help recast machine (deep) learning algorithms as mathematical descriptions that are easier to handle.

Jasper Lee

Jasper Lee joined UW-Madison as a postdoc in August 2021, mentored by Ilias Diakonikolas in the Department of Computer Sciences and partly supported by IFDS. He completed his PhD at Brown University, working with Paul Valiant. His thesis work revisited and settled a basic problem in statistics: given samples from an unknown 1-dimensional probability distribution, what is the best way to estimate the mean of the distribution, with optimal finite-sample and high probability guarantees? Perhaps surprisingly, the conventional method of taking the average of samples is sub-optimal, and Jasper’s thesis work provided the first provably optimal “sub-Gaussian” 1-dimensional mean estimator under minimal assumptions. Jasper’s current research focuses on revisiting other foundational statistical problems and solving them also to optimality. He is additionally pursuing directions in the adjacent area of algorithmic robust statistics.

Julian Katz-Samuels

Julian’s research focuses on designing practical machine learning algorithms that adaptively collect data to accelerate learning. His recent research interests include active learning, multi-armed bandits, black-box optimization, and out-of-distribution detection using deep neural networks. He is also very interested in machine learning applications that promote the social good.

Greg Canal

Greg Canal (gcanal@wisc.edu) joined UW-Madison in September 2021. He is doing a postdoc with Rob Nowak in Electrical and Computer Engineering, partly supported by IFDS. He completed his PhD at Georgia Tech in Electrical and Computer Engineering in 2021. For his thesis, he developed and analyzed new active machine learning algorithms inspired by feedback coding theory. During his first year as a postdoc he worked on a new approach for multi-user recommender systems, and as he continues into his second year he plans on exploring new active learning algorithms for deep neural networks.

Ahmet Alacaoglu

Ahmet Alacaoglu (alacaoglu@wisc.edu) joined UW–Madison in September 2021. He is doing a postdoc with Stephen J. Wright, partly supported by IFDS. He completed his PhD at EPFL, Switzerland in Computer and Communication Sciences in 2021. For his thesis, he developed and analyzed randomized first-order primal-dual algorithms for convex minimization and min-max optimization. During his postdoc, he is working on nonconvex stochastic optimization and investigating the connections between optimization theory and reinforcement learning.

Ahmet is on the academic job market (2022-2023)!

Lijun Ding

Lijun Ding (lijunbrianding@gmail.com) joined the University of Wisconsin-Madison (UW-Madison) in September 2022 as an IFDS postdoc with Stephen J. Wright. Before joining IFDS at UW-Madison, he was an IFDS postdoc with Dmitriy Drusvyatskiy and Maryam Fazel at the University of Washington. He obtained his Ph.D. in Operations Research at Cornell University, advised by Yudong Chen and Madeleine Udell.

Lijun’s research lies at the intersection of optimization, statistics, and data science. By exploring ideas and techniques in statistical learning theory, convex analysis, and projection-free optimization, he analyses and designs efficient and scalable algorithms for classical semidefinite programming and modern nonconvex statistical problems. He also studies the interplay between model overparametrization, algorithmic regularization, and model generalization via the lens of matrix factorization. He plans to explore a wider range of topics at this intersection during his appointment at UW-Madison.

He is on the 2022-23 job market!

Yiding Chen

Yiding Chen (Computer Sciences), advised by Jerry Zhu (Computer Sciences) and collaborating with Po-Ling Loh (Electrical and Computer Engineering), is doing research on adversarial machine learning and robust machine learning. In particular, he focuses on robust learning in high dimensions. He is also interested in the intersection of adversarial machine learning and control theory.

Brandon Legried

Brandon Legried (Mathematics), advised by Sebastien Roch and working with Cecile Ane (Botany and Statistics), is working on mathematical statistics with applications to computational biology. He is studying evolutionary history, usually depicted with a tree. As an inference problem, it is interesting to view extant species information as observed data and reconstruct tree topologies or states of evolving features (such as a DNA sequence). He looks to achieve results that extend across many model assumptions. The mathematical challenges involved lie at the intersection of probability and optimization.

Ankit Pensia

Ankit Pensia (Computer Science), advised by Po-Ling Loh (Statistics) and Varun Jog (Electrical and Computer Engineering), is working on projects in robust machine learning. His focus is on designing statistically and computationally efficient estimators that perform well even when the training data itself is corrupted. Traditional algorithms don’t perform well in the presence of noise: they are either slow or incur large error. As data today is high-dimensional and usually corrupted, a better understanding of fast and robust algorithms would lead to better performance in scientific and practical applications.

Blake Mason

Blake Mason (Electrical and Computer Engineering), advised by Rob Nowak (Electrical and Computer Engineering) and Jordan Ellenberg (Mathematics), is investigating problems of learning from comparative data, such as ordinal comparisons and similarity/dissimilarity judgements. In particular, he is studying metric learning and clustering problems in this setting with applications to personalized education, active learning, and representation learning. Additionally, he studies approximate optimization techniques for extreme classification applications.

Yuchen Zeng

Yuchen Zeng (Computer Science), advised by Kangwook Lee (Electrical and Computer Engineering) and Stephen J. Wright (Computer Science), is interested in Trustworthy AI with a particular focus on fairness. Her recent work investigates training fair classifiers from decentralized data. Currently, she is working on developing a new fairness notion that considers dynamics.

Stephen Wright honored with a 2022 Hilldale Award

As director of the Institute for the Foundations of Data Science, Steve Wright is dedicated to the development and teaching of the complex computer algorithms that underlie much of modern society. The institute, which has been supported by more than $6 million in federal funds under Wright, brings together dozens of researchers across computer science, math, statistics and other departments to advance this interdisciplinary work. Read more…

Rodriguez elected as a 2022 ASA Fellow

We are pleased to announce that Abel Rodriguez, Professor of Statistics, has been elected as a Fellow of the American Statistical Association (ASA).

ASA Fellows are named for their outstanding contributions to statistical science, leadership, and advancement of the field. This year, awardees will be recognized at the upcoming Joint Statistical Meetings (JSM) during the ASA President’s Address and Awards Ceremony. Congratulations on this prestigious recognition!

Sebastien Roch named Fellow of Institute of Mathematical Statistics

Sebastien Roch has been named a Fellow of the Institute of Mathematical Statistics (IMS). IMS is the main scientific society for probability and the mathematical end of statistics. Among its various activities, IMS publishes the Annals series of flagship journals of probability.

PIMS-IFDS-NSF Summer School in Optimal Transport

The PIMS-IFDS-NSF Summer School on Optimal Transport is happening at the University of Washington, Seattle, from June 19 to July 1, 2022. Registration is now open through the PIMS event webpage. Please note that the deadline to apply for funded accommodation for junior participants is February 15. Our main speakers are world leaders in the mathematics of optimal transport and its various fields of application.

- Alfred Galichon (NYU, USA)

- Inwon Kim (UCLA, USA)

- Jan Maas (IST, Austria)

- Felix Otto (Max Planck Institute, Germany)

- Gabriel Peyré (ENS, France)

- Geoffrey Schiebinger (UBC, Canada)

Details and abstracts can be found on the summer school webpage.

As part of this summer school, IFDS will host a Machine Learning (ML) Day, with a focus on ML applications of Optimal Transport, that will include talks and a panel discussion. Details will be announced here later.

Wisconsin Announces Fall ’21 and Spring ’22 RAs

The UW-Madison IFDS site is funding several Research Assistants during Fall 2021 and Spring 2022 to collaborate across disciplines on IFDS research. Each one is advised by a primary and a secondary adviser, all of them members of IFDS.

Jillian Fisher

Jillian Fisher (Statistics) works with Zaid Harchaoui (Statistics) and Yejin Choi (Allen School for CSE) on dataset biases and generative modeling. She is also interested in nonparametric and semiparametric statistical inference.

Yifang Chen

Yifang Chen (CSE) is co-advised by Kevin Jamieson (CSE) and Simon Du (CSE). Yifang is an expert on algorithm design for active learning and multi-armed bandits in the presence of adversarial corruptions. Recently she has been working with Kevin and Simon on sample-efficient methods for multi-task learning.

Guanghao Ye

Guanghao Ye (CSE) works with Yin Tat Lee (CSE) and Dmitriy Drusvyatskiy (Mathematics) on designing faster algorithms for non-smooth non-convex optimization. He is interested in convex optimization. He developed the first nearly linear time algorithm for linear programs with small treewidth.

Josh Cutler

Josh Cutler (Mathematics) works with Dmitriy Drusvyatskiy (Mathematics) and Zaid Harchaoui (Statistics) on stochastic optimization for data science. His most recent work has focused on designing and analyzing algorithms for stochastic optimization problems with data distributions that evolve in time. Such problems appear routinely in machine learning and signal processing.

Romain Camilleri

Romain Camilleri (CSE) works with Kevin Jamieson (CSE), Maryam Fazel (ECE), and Lalit Jain (Foster School of Business). He is interested in robust high dimensional experimental design with adversarial arrivals. He also collaborates with IFDS student Zhihan Xiong (CSE).

Yue Sun

Yue Sun (ECE) works with Maryam Fazel (ECE) and Mehran Mesbahi (Aeronautics), as well as collaborator Prof. Samet Oymak (UC Riverside). Yue is currently interested in two topics: (1) understanding the role of over-parametrization in meta-learning, and (2) gradient-based policy update methods for control, which connect the two fields of control theory and reinforcement learning.

Lang Liu

Lang Liu (Statistics) works with Zaid Harchaoui (Statistics) and Soumik Pal (Mathematics – Kantorovich Initiative) on statistical hypothesis testing and regularized optimal transport. He is also interested in statistical change detection and natural language processing.

IFDS-MADLab Workshop

Statistical Approaches to Understanding Modern ML Methods

Aug 2-4, 2021

University of Wisconsin–Madison

When we use modern machine learning (ML) systems, the output often consists of a trained model with good performance on a test dataset. This satisfies some of our goals in performing data analysis, but leaves many unaddressed — for instance, we may want to build an understanding of the underlying phenomena, to provide uncertainty quantification about our conclusions, or to enforce constraints of safety, fairness, robustness, or privacy. As an example, classical statistical methods for quantifying a model’s variance rely on strong assumptions about the model — assumptions that can be difficult or impossible to verify for complex modern ML systems such as neural networks.

This workshop will focus on using statistical methods to understand, characterize, and design ML models — for instance, methods that probe “black-box” ML models (with few to no assumptions) to assess their statistical properties, or tools for developing likelihood-free and simulation-based inference. Central themes of the workshop may include:

Organizers

Participants

Schedule

| Morning | Conformal Prediction Methods | Speaker |

|---|---|---|

| 9:15-9:30 | Welcome and Introduction | |

| 9:30-10:15 | Conformal prediction, testing, and robustness | Vladimir Vovk |

| 10:15-11:00 | Conformalized Survival Analysis | Lihua Lei |

| 11:00-11:15 | Break | |

| 11:15-12:00 | Conformal prediction intervals for dynamic time series | Yao Xie |

| Afternoon | Challenges and Trade-offs in Deep Learning | Speaker |

| 2:00-2:45 | The Statistical Trade-offs of Generative Modeling with Deep Neural Networks | Zaid Harchaoui |

| 2:45-3:30 | The Deep Bootstrap Framework: Good Online Learners are Good Offline Generalizers | Hanie Sedghi |

| 3:30-3:45 | Break | |

| 3:45-4:30 | Uncovering the Unknowns of Deep Neural Networks: Challenges and Opportunities | Sharon Li |

| 5:00-8:00 | Reception at Tripp Commons, Memorial Union | |

| Morning | Robust Learning | Speaker |

|---|---|---|

| 9:30-10:15 | Optimal doubly robust estimation of heterogeneous causal effects | Edward Kennedy |

| 10:15-11:00 | Robust W-GAN-Based Estimation Under Wasserstein Contamination | Po-Ling Loh |

| 11:00-11:15 | Break | |

| 11:15-12:00 | PAC Prediction Sets Under Distribution Shift | Osbert Bastani |

| Afternoon | Interpretation in Black-Box Learning | Speaker |

| 2:00-2:45 | Floodgate: Inference for Variable Importance with Machine Learning | Lucas Janson |

| 2:45-3:30 | From Predictions to Decisions: A Black-Box Approach to the Contextual Bandit Problem | Dylan Foster |

| 3:30-3:45 | Break | |

| 3:45-5:00 | **Lightning talks** by in-person participants | |

| | Searching for Synergy in High-Dimensional Antibiotic Combinations | Jennifer Brennan (Seattle) |

| | Supervised tensor decomposition with features on multiple modes | Jiaxin Hu (Madison) |

| | Latent Preference Matrix Estimation with Graph Side Information | Changhun Jo (Madison) |

| | Nonconvex Factorization and Manifold Formulations are Almost Equivalent in Low-rank Matrix Optimization | Yuetian Luo (Madison) |

| | Excess Capacity and Backdoor Poisoning | Naren Manoj (TTIC) |

| | Risk bounds for regression and classification with structured feature maps | Andrew McRae (GaTech) |

| | Robust regression with covariate filtering: Heavy tails and adversarial contamination | Ankit Pensia (Madison) |

| | Derandomizing knockoffs | Zhimei Ren (Chicago) |

| Morning | Modern Statistical Methodologies pt I | Speaker |

|---|---|---|

| 9:30-10:15 | Discrete Splines: Another Look at Trend Filtering and Related Problems | Ryan Tibshirani |

| 10:15-11:00 | A quick tour of distribution-free post-hoc calibration | Aaditya Ramdas |

| 11:00-11:15 | Break | |

| 11:15-12:00 | Recovering from Biased Data: Can Fairness Constraints Improve Accuracy? | Avrim Blum |

| Afternoon | Modern Statistical Methodologies pt II | Speaker |

| 2:00-2:45 | Model-assisted analyses of cluster-randomized experiments | Peng Ding |

| 2:45-3:30 | Simulation-based inference: recent progress and open questions | Kyle Cranmer |

| 3:30-3:45 | Break | |

| 3:45-4:30 | Statistical challenges in Adversarially Robust Machine Learning | Kamalika Chaudhuri |

Slides

All slides for the workshop are included in the gallery below. Click on a poster to see it in full screen.

IFDS 2021 Summer School

Schedule

(all times CDT)

Monday, July 26th

| Time | Speaker |

|---|---|

| 10:30-11:30 | Karl Rohe |

| 1:00-2:00 | Karl Rohe |

| 2:30-3:30 | Jose Rodriguez |

| 4:00-5:00 | Jose Rodriguez |

| 5:15-6:30 | Poster Session |

Tuesday, July 27th

| Time | Speaker |

|---|---|

| 10:30-11:30 | Cécile Ané |

| 1:00-2:00 | Cécile Ané |

| 2:30-3:30 | Miaoyan Wang |

| 4:00-5:00 | Miaoyan Wang |

| 5:15-6:30 | Poster Session |

Wednesday, July 28th

| Time | Speaker |

|---|---|

| 10:30-11:30 | Dimitris Papailiopoulos |

| 1:00-2:00 | Dimitris Papailiopoulos |

| 2:30-3:30 | Sebastien Bubeck |

| 4:00-5:00 | Sebastien Bubeck |

Thursday, July 29th

| Time | Speaker |

|---|---|

| 10:30-11:30 | Kevin Jamieson |

| 1:00-2:00 | Kevin Jamieson |

| 2:30-3:30 | Ilias Diakonikolas |

| 4:00-5:00 | Ilias Diakonikolas |

Friday, July 30th

| Time | Speaker |

|---|---|

| 10:30-11:30 | Rina Foygel-Barber |

| 1:00-2:00 | Rina Foygel-Barber |

AI4All @ Washington

The UW instance of AI4ALL is an AI4ALL-sanctioned program run under the Taskar Center for Accessible Technology, an initiative of the UW Paul G. Allen School for Computer Science & Engineering. This year we were joined by faculty from IFDS at UW in teaching the summer cohort. More information at https://ai4all.cs.washington.edu/about-us/

IFDS Summer School 2021: Registration Open!

The IFDS Summer School 2021, co-sponsored by MADLab, will take place on July 26-July 30, 2021. The summer school will introduce participants to a broad range of cutting-edge areas in modern data science with an eye towards fostering cross-disciplinary research. We have a fantastic lineup of lecturers:

* Cécile Ané (UW-Madison)

* Rina Foygel Barber (Chicago)

* Sebastien Bubeck (Microsoft Research)

* Ilias Diakonikolas (UW-Madison)

* Kevin Jamieson (Washington)

* Dimitris Papailiopoulos (UW-Madison)

* Jose Rodriguez (UW-Madison)

* Karl Rohe (UW-Madison)

* Miaoyan Wang (UW-Madison)

Registration for both virtual and in-person participation is now open. More details at: https://ifds.info/ifds-2021-summer-school/

Six Cluster Hires in Data Science at UW–Madison

IFDS is delighted to welcome six new faculty members at UW-Madison working in areas related to fundamental data science. All were hired as a result of a cluster hiring process proposed by the IFDS Phase 1 leadership at Wisconsin in 2017 and approved by UW-Madison leadership in 2019. Although the cluster hire was intended originally to hire only three faculty members in the TRIPODS areas of statistical, mathematical, and computational foundations of data science, three extra positions funded through other faculty lines were filled during the hiring process.

One of the new faculty members, Ramya Vinayak, joined the ECE Department in 2020. The other five will join in Fall 2021. They are:

These faculty will bring new strengths to IFDS and UW-Madison. We’re excited that they are joining us, and look forward to working with them in the years ahead!

Research Highlights: Robustness meets Algorithms

IFDS Affiliate Ilias Diakonikolas and collaborators recently published a survey article titled “Robustness Meets Algorithms” in the Research Highlights section of the Communications of the ACM. The article presents a high-level description of groundbreaking work by the authors, which developed the first robust learning algorithms for high-dimensional unsupervised learning problems, including, for example, robustly estimating the mean and covariance of a high-dimensional Gaussian (first published in FOCS’16/SICOMP’19). This work resolved a long-standing open problem in statistics and, at the same time, marked the beginning of a new area now known as “algorithmic robust statistics”. Ilias is currently finishing a book on the topic with Daniel Kane, to be published by Cambridge University Press.

TRIPODS PI meeting

A gathering of leaders of the institutes funded under NSF’s TRIPODS program since 2017

Venue: Zoom and gather.town.

Thu 6/10/21: 11:30-2 EDT and 3-5:30 EDT (5 hours) • Fri 6/11/21: 11:30-2 EDT and 3-5:30 EDT (5 hours)

Format

Panel Nominations:

If you wish to be a panelist in the “major challenges” panel, please submit a 200-word abstract to the meeting co-chairs by 5/20/21. (If we receive more nominations than there are slots, a selection process involving all currently-funded TRIPODS institutes will be conducted.)

Panelists in the “industry” panel should be industry affiliates of a currently funded TRIPODS institute, or possibly members of an institute with a joint academic-industry appointment. Please mail your suggestions to the organizers, with a few words on what topics the nominee could cover.

Schedule

(all times EDT)

Thursday 6/10/2021

| Time (EDT) | Topic | Presenter |

|---|---|---|

| 11:30-12:00 | Introduction & NSF Presentation | Margaret Martonosi (CISE), Dawn Tilbury (ENG), Sean Jones (MPS) |

| 12:00-12:15 | Talk: IFDS (I) | Jelena Diakonikolas |

| 12:15-12:30 | Talk: FODSI (I) | Stefanie Jegelka |

| 12:30-12:45 | Talk: IDEAL | Ali Vakilian |

| 12:45-1:00 | Break | |

| 1:00-1:15 | Talk: GDSC | Aaron Wagner |

| 1:15-1:30 | Talk: Tufts Tripods | Misha Kilmer |

| 1:30-1:45 | Talk: Rutgers – DATA-INSPIRE | Konstantin Mischaikow |

| 1:45-2:00 | Talk: UMass | Patrick Flaherty |

| 2:00-3:00 | Lunch | |

| 3:00-4:00 | PANEL: Major Challenges in Fundamental Data Science | |

| 4:00-4:15 | Break | |

| 4:15-5:30 | POSTER SESSION | gather.town |

List of Institutes: https://nsf-tripods.org

Friday 6/11/2021

| Time (EDT) | Topic | Presenter |

|---|---|---|

| 11:30-11:45 | Talk: JHU | |

| 11:45-12:00 | Talk: FODSI (II) | Nika Haghtalab |

| 12:00-12:15 | Talk: IFDS (II) | Kevin Jamieson |

| 12:15-12:30 | Talk: PIFODS | Hamed Hassani |

| 12:30-12:45 | Break | |

| 12:45-1:00 | Talk: TETRAPODS UC-Davis | Rishi Chaudhuri |

| 1:00-1:15 | Talk: UIC | Anastasios Sidiropoulos |

| 1:15-1:30 | Talk: D4 Iowa State | Pavan Aduri |

| 1:30-1:45 | Talk: UIUC | Semih Cayci |

| 1:45-2:00 | Talk: Duke | Sayan Mukherjee |

| 2:00-3:00 | Lunch | |

| 3:00-3:15 | Talk: UT-Austin | Sujay Sanghavi |

| 3:15-3:30 | Talk: FIDS | Simon Foucart |

| 3:30-3:45 | Talk: FINPenn | Alejandro Ribeiro |

| 3:45-4:45 | PANEL: Industry Adoption of Fundamental Data Science | |

Rebecca Willett honored by SIAM

Rebecca Willett, IFDS PI at the University of Chicago, has been selected as a SIAM Fellow for 2021. She was recognized “For her contributions to mathematical foundations of machine learning, large-scale data science, and computational imaging.” https://www.siam.org/research-areas/detail/computational-science-and-numerical-analysis

IFDS team analyzes COVID-19 spread in Dane and Milwaukee county

A UW-Madison IFDS team (Nan Chen, Jordan Ellenberg, Xiao Hou and Qin Li), together with Song Gao, Yuhao Kang and Jingmeng Rao (UW-Madison, Geography), Kaiping Chen (UW-Madison, Life Sciences Communication) and Jonathan Patz (Global Health Institute), recently studied the spreading pattern of COVID-19 and its correlation with business foot traffic, race and ethnicity, and age structure in sub-regions of Dane and Milwaukee counties. The results were published in the Proceedings of the National Academy of Sciences of the United States of America (https://www.pnas.org/content/118/24/e2020524118).

The team developed a stochastic SEIR model augmented with human mobility flows. By combining the model with data assimilation and machine learning techniques, the team reconstructed the historical growth trajectories of COVID-19 infection in both counties. The results reveal different types of spatial heterogeneity (e.g., varying peak infection timing in different subregions) even within a county, suggesting that a regionalization-based policy (e.g., for allocating testing and vaccination resources) is necessary to mitigate the spread of COVID-19 and to prepare for future epidemics.

Data science workshops co-organized by IFDS members

Mary Silber

Po-Ling Loh

Jelena Diakonikolas

Rebecca Willett

Xiaoxia Wu

IFDS members have been active leaders in the data science community over the past year, organizing workshops with participants reflecting all core TRIPODS disciplines, covering a broad range of career stages, and advancing the participation of women. IFDS executive committee member Rebecca Willett co-organized a workshop on the Multifaceted Complexity of Machine Learning at the NSF Institute of Mathematical and Statistical Innovation, which featured IFDS faculty Po-Ling Loh and Jelena Diakonikolas as speakers. With the support of the Institute for Advanced Study Women and Mathematics Ambassador program, IFDS postdoc Xiaoxia (Shirley) Wu organized the Women in Theoretical Machine Learning Symposium, which again featured IFDS faculty Po-Ling Loh and Jelena Diakonikolas. Finally, IFDS faculty Rebecca Willett and Mary Silber co-organized the Graduate Research Opportunities for Women 2020 conference, which is aimed at female-identified undergraduate students who may be interested in pursuing a graduate degree in the mathematical sciences. The conference is open to undergraduates from U.S. colleges and universities, including international students. IFDS faculty members Rina Barber and Rebecca Willett were featured speakers.

Wisconsin funds 8 Summer RAs

For the first time, IFDS @ Wisconsin is sponsoring a Summer RA program in 2021. As with RAs sponsored under the usual Fall and Spring semester programs, Summer 2021 RAs will work with members of IFDS at Wisconsin and elsewhere on fundamental data science research. They will also participate in and assist with IFDS’s activities during the summer, including the Summer School and Workshop to be held in Madison in July and August 2021.

The Summer 2021 RAs are: